Easy way to scale your Node.js Application

Today we will be looking at how to scale your Node.js application.

Before we start, we need to understand what scalability is.

If you are running a website, web service, or application, its success depends on how well it handles the network traffic it receives. It is common to underestimate how much traffic your system will have to handle, especially in the early stages. You can end up with a system that cannot cope with the load and cannot scale. So what options do we have? That’s where horizontal and vertical scaling come in. Today we will talk about horizontal scaling.

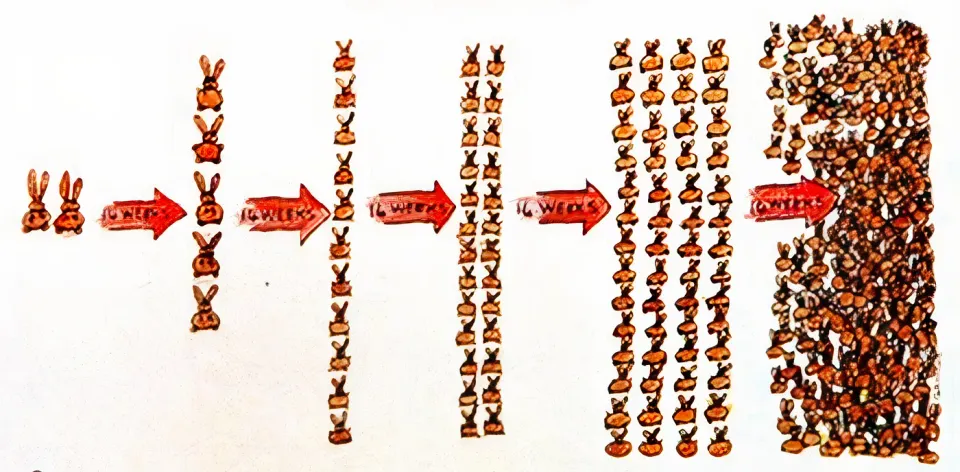

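Horizontal scaling comes down to the number of instances of your application running at the same time. In short, it means duplicating your application. (Vertical scaling, by contrast, means giving a single instance a more powerful machine with more CPU or memory.)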

# Cluster Mode

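A cluster of Node.js processes runs multiple instances of your application, and those instances share the workload. A single Node.js process runs your JavaScript on one thread, so running one process per CPU core lets the application use all of the machine's cores.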

I recommend using PM2 for this.

PM2 is a production process manager for applications with a built-in load balancer. It allows you to keep applications alive forever, to reload them without downtime and to facilitate common system admin tasks.
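Reloading without downtime works best when each process finishes its in-flight requests before it exits. When PM2 stops or reloads a process, it first sends `SIGINT`. If the process has not exited after a timeout (the `kill_timeout` option), PM2 kills it with `SIGKILL`. Below is a minimal sketch of a shutdown handler. It assumes a Node.js `http.Server`, such as the one Express's `app.listen()` returns. The name `createShutdownHandler` is hypothetical, and the `exit` parameter exists only so the logic can be tried without killing the current process:

```javascript
// Build a SIGINT handler that stops accepting new connections, waits for
// existing connections to end, then exits the process.
function createShutdownHandler(server, exit = (code) => process.exit(code)) {
  let shuttingDown = false;
  return function onSignal() {
    if (shuttingDown) return; // ignore repeated signals while closing
    shuttingDown = true;
    server.close((err) => {
      // server.close() calls back once all existing connections have ended;
      // err is set if the server was not listening in the first place.
      exit(err ? 1 : 0);
    });
  };
}

module.exports = { createShutdownHandler };
```

In `server.js` you would wire it up with `const server = app.listen(port);` followed by `process.on('SIGINT', createShutdownHandler(server));`.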

Before you start your application, you must install PM2 globally:

```bash
npm install pm2 -g
```

Then start your application with PM2:

```bash
pm2 start server.js
```

I will create a simple Express application to demonstrate how to scale it.

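```javascript
// server.js
const express = require('express');

const app = express();
const port = process.env.PORT || 3000;

app.get('/', (req, res) => {
  // Log which process handled the request, so load balancing is visible in `pm2 logs`
  console.log(`Handled ${req.method} ${req.url} in process ${process.pid}`);
  res.send('Hello World!');
});

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`);
});
```

Once the application is started, you can manage it easily with PM2. By default, PM2 starts one instance of your application. Let’s scale it up to two instances (passing `-i` runs the app in PM2's cluster mode):

```bash
pm2 start server.js -i 2
```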

The idea is to run multiple processes on the same port. Internal load balancing then distributes incoming connections across all the processes, and therefore across the CPU cores.

You can also use Node.js's built-in `cluster` module directly. It is the most basic way to scale your application, and PM2's cluster mode is built on top of it.

Let’s look at a few more PM2 features. You can use an ecosystem config file to manage your application:

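```javascript
// ecosystem.config.js
module.exports = {
  apps: [{
    name: "app1",
    script: "./app.js",
    // Restart the process if its memory usage exceeds 700 MB
    max_memory_restart: '700M',
    // Applied with `pm2 start ecosystem.config.js --env production`
    env_production: {
      NODE_ENV: "production"
    },
    // Applied with `pm2 start ecosystem.config.js --env development`
    env_development: {
      NODE_ENV: "development"
    }
  }]
};
```

`max_memory_restart`: your app will be restarted if it exceeds the specified amount of memory. It accepts a human-friendly format such as "10M", "100K", "2G", and so on. See the PM2 ecosystem file documentation for more.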

# Network Load Balancing

A few things to check before you implement load balancing:

- Check your cron jobs to make sure you will not run multiple copies of the same job. You may need to move them to a single instance.

- Check any in-memory cache. Each instance has its own memory, so you may need to store the cache in Redis or Memcached instead.

- Check how you run database migrations. You may need to run them once from a CI/CD tool such as Jenkins, rather than from every instance.
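One common way to keep a scheduled job on a single instance is to schedule it only in the first PM2 instance. Here is a minimal sketch. It assumes the instances belong to one PM2 app started with `-i` or `instances`, so PM2 sets `NODE_APP_INSTANCE` to `0`, `1`, and so on for each one. As far as I know, apps started with separate `pm2 start` commands each get their own numbering starting at `0`, so this guard would not help there. `cleanupExpiredSessions` is a hypothetical job:

```javascript
// Returns true if this process should run scheduled (cron-like) jobs.
// Assumption: PM2 numbers the instances of one app via NODE_APP_INSTANCE;
// outside PM2 the variable is absent and the process counts as instance 0.
function shouldRunScheduledJobs(env = process.env) {
  return (env.NODE_APP_INSTANCE ?? '0') === '0';
}

// Hypothetical job standing in for your real cron task.
function cleanupExpiredSessions() {
  console.log(`Cleaning up sessions in process ${process.pid}`);
}

let timer = null;
if (shouldRunScheduledJobs()) {
  // Run hourly, but only on instance 0; the other instances skip scheduling.
  timer = setInterval(cleanupExpiredSessions, 60 * 60 * 1000);
  timer.unref(); // don't keep the process alive just for this timer
}
```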

P.S. If you would like more advice on any of these topics, please leave a comment.

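Let’s run our application on ports 3000, 3001, 3002, and 3003. If the cluster from the previous section is still running on port 3000, remove it first with `pm2 delete all`. The `-f` flag forces PM2 to start another copy of a script it is already running:

```bash
PORT=3000 pm2 start server.js -f --update-env
PORT=3001 pm2 start server.js -f --update-env
PORT=3002 pm2 start server.js -f --update-env
PORT=3003 pm2 start server.js -f --update-env
```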

The two PM2 modes, explained:

- Fork mode: creates a separate process for each of your app's N replicas. The `increment_var` parameter gives every replica a unique value of an environment variable such as `PORT`, avoiding port conflicts.

- Cluster mode: allows networked Node.js applications (HTTP(S)/TCP/UDP servers) to be scaled across all available CPUs without any code modifications. The whole cluster shares a single application port.

Now we have 4 instances of our simple application running, each on its own port.

Next, we need a load balancer that serves as the single point of contact for clients, as in the image. In that example, the client sends 10 requests.

By default, Nginx uses the round-robin algorithm:

Round‑robin load balancing is one of the simplest methods for distributing client requests across a group of servers. Going down the list of servers in the group, the round‑robin load balancer forwards a client request to each server in turn. When it reaches the end of the list, the load balancer loops back and goes down the list again (sends the next request to the first listed server, the one after that to the second server, and so on).
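To make the round-robin loop concrete, here is a small in-process sketch that assigns the 10 client requests from the example to the four upstream servers. It is not Nginx's actual implementation, which also handles weights and failed servers:

```javascript
// Minimal round-robin selector: hands out servers in list order and wraps
// around to the first one after the last.
class RoundRobin {
  constructor(servers) {
    if (servers.length === 0) throw new Error('need at least one server');
    this.servers = [...servers];
    this.next = 0;
  }

  pick() {
    const server = this.servers[this.next];
    this.next = (this.next + 1) % this.servers.length;
    return server;
  }
}

// Distribute 10 client requests across the four instances from this article.
const balancer = new RoundRobin([
  '127.0.0.1:3000', '127.0.0.1:3001', '127.0.0.1:3002', '127.0.0.1:3003',
]);
const assignments = Array.from({ length: 10 }, () => balancer.pick());
console.log(assignments);
```

Requests 1, 5, and 9 go to port 3000, and requests 2, 6, and 10 go to port 3001. Ports 3002 and 3003 get two requests each.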

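Before we start configuring Nginx, let’s install it. On macOS with Homebrew:

```bash
brew install nginx
```

Once it is running, test it. Homebrew's default Nginx configuration listens on port 8080:

```bash
curl http://localhost:8080
```

After installing with Homebrew, the default location of `nginx.conf` is `/opt/homebrew/etc/nginx/nginx.conf` on Apple Silicon Macs (`/usr/local/etc/nginx/nginx.conf` on Intel Macs).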

Set up a new configuration for Nginx in that file:

```nginx
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;
    gzip on;

    # The four Node.js instances started with PM2; Nginx rotates requests among them
    upstream my_http_servers {
        server 127.0.0.1:3000;
        server 127.0.0.1:3001;
        server 127.0.0.1:3002;
        server 127.0.0.1:3003;
    }

    server {
        listen 80;
        server_name localhost;

        location / {
            # Forward the client's address and the original Host header to Node.js
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header Host $http_host;
            proxy_pass http://my_http_servers;
        }
    }
}
```

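Restart Nginx so it picks up the new configuration. You need `sudo` because the server now listens on port 80:

```bash
sudo nginx
```

Once Nginx is running, open the PM2 logs with the `pm2 logs all` command and send a few requests from the browser to `http://localhost`. If your route handler logs each request, you should see the requests received and processed by the 4 Node.js application servers in round-robin order.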
